An Outsourcing Challenge: Creating A Knowledge Base With Legacy Value

By Sue Wollowitz, president, Wollowitz Associates, LLC.

At the risk of revisiting an overdiscussed topic, knowledge management continues to be a challenge at the interface between sponsors and CDMOs. We know that regulatory agencies see knowledge management as a major component of quality risk assessment and of design-space understanding, as stated in ICH Q10:

Product and process knowledge should be managed from development through the commercial life of the product up to and including product discontinuation. For example, development activities using scientific approaches provide knowledge for product and process understanding. Knowledge management is a systematic approach to acquiring, analyzing, storing, and disseminating information related to products, manufacturing processes, and components. Sources of knowledge include, but are not limited to, prior knowledge (public domain or internally documented); pharmaceutical development studies; technology transfer activities; process validation studies over the product life cycle; manufacturing experience; innovation; continual improvement; and change management activities.

However, when development activities are outsourced, documenting the development history can become particularly challenging because the sponsor does not have full access to the primary data or to the environment in which it was gathered. In addition, changing dynamics at the sponsor company can place further demands on the documentation of development activities. Here is an example of the complications that can arise, particularly for small companies that depend on CDMOs for most of their development activities:

A small startup with limited funds identifies a development candidate and a possible synthesis route in-house. It contracts a CDMO to provide initial GLP and Phase 1 material and to perform early analytical development and stability work. Subsequently, with new funding, the startup hires additional CMC (chemistry, manufacturing, and controls) professionals internally. These new team members must quickly learn what has been done at the CDMO and identify new service providers for preformulation work, solid-state characterization, and/or Phase 1 drug product manufacture. After a year of activity, with funds again running low, the startup tightens its belt, the project is put on hold, and the recently hired CMC experts move on to other companies.

A year later, the project is reactivated or reviewed for outlicensing/partnering. The internal people who oversaw the development work are gone. Contracts with the CDMOs have expired, and the workers there have changed positions. What remains is a pile of development reports from multiple sources, gathering e-dust. New employees must determine how long it will take to become Phase 2 or 3 ready, whether the formulation or test method is truly robust, whether they can support a process change without additional work, why the yields are so variable, and so on.

Or how about this one?

A CDMO is asked to quote on a project that has already been through a Phase 1 study. It is given summaries of the process, formulation, test methods, etc. in a technical package, perhaps taken directly from the IND (investigational new drug) application. After taking on the project, the CDMO finds that the test method has insufficient resolution or that the process cannot be replicated, and it has to invest more time (and money) in the project than it quoted, frustrating both service provider and client. When the CDMO finally completes its work, its reports are subjected to numerous rewrites by the client. Two years later, when it is no longer working with the client, the CDMO is asked to provide additional supporting data that has long since been archived.

If you have been in our business for a while, you may have found yourself in situations like these, frustrated by the way development activities have been documented at the CDMO and across the sponsor interface. Clearly, a database of poor-quality documents is not knowledge management. A legacy perspective on the documentation of development activities is essential. Information, and the assessment of that information, must be captured in a way that anticipates how people may use it in the future, particularly in the absence of its creators.

This is not merely a matter of convenience and efficiency, such as avoiding the need to repeat studies. Miscommunication of results can have strategic as well as tactical consequences. For example, misunderstanding the stability results or the processing conditions used in a study may lead to erroneous decisions about candidate viability, the need for a specific process or presentation, or the acceptability of a clinical formulation. It may also lead to misjudging the value of an asset, the uniqueness of a formulation, or the probability of success.

CONSIDER ALL USERS

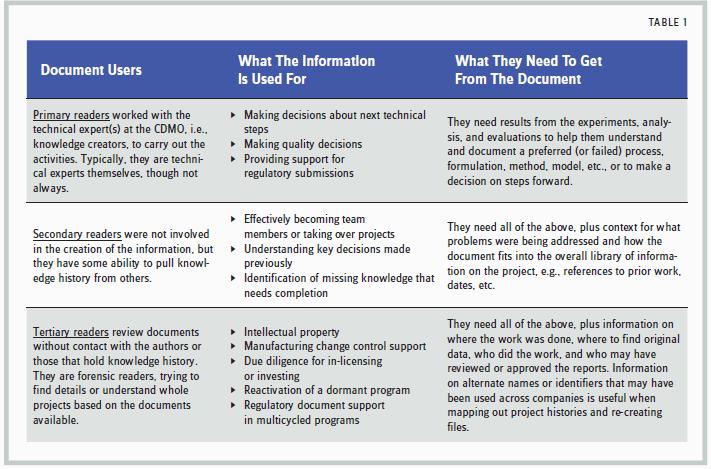

To create legacy-value reports, both sponsors and providers need to keep in mind all the potential users of the documents. As shown in Table 1, the accessibility of usable information affects the company and the program over many years.

The tertiary, or “forensic,” reader is particularly challenged by poor knowledge management. Even in an electronic world, we still assume that the project guru, if you will, will make the connections between data points. But project gurus disappear, and the value of the knowledge database is measured only by what can be retrieved, and understood, in their absence.

Development documents that should meet the criteria discussed here include: process and product development reports (summaries of the work that is captured in notebooks and batch records), design-of-experiment studies, method-development reports, stability reports, and campaign reports. Documentation such as data analysis and modeling — which use data from other reports to create new results, draw new conclusions, or communicate technical progress and positions to a broader audience — should also be included.

To ensure that documents are of sufficient quality to add legacy value to the knowledge database, they should be assessed against the needs of these readers. Basic questions to ask include:

- Can anyone look at this document and know what project it is for, why it was done, and what the key conclusions were?

- Can a person skilled in the art, but not familiar with the project, repeat the work based on this document and any referenced materials (that are also in the knowledge base)?

- Are the results sufficiently traceable to primary documents and data that can be used to support regulatory and quality positions?

- Are key conclusions fully supported by the evidence provided in the document (and if not, should they be key conclusions)?

Fortunately, documentation doesn’t have to be complicated or time-consuming. In some cases, CDMOs have already systematized their reports to a certain level, and the addition of a “context” page by the sponsor before completion or filing is all that is needed. In other cases, CDMO documentation practices may require an upgrade, but I have found that, in the long run, CDMOs end up glad they made the requested changes.

Sometimes a simple checklist is helpful for the review process. For example, are the types of test equipment, raw materials, and excipients adequately defined? Is there CDMO-specific jargon that needs clarification? Are referenced documents also on file with the sponsor? Are sufficient (and clear) raw data and spectra provided to allow the sponsor to reanalyze the results a year from now? As part of good communication, it helps for the sponsor to provide such a checklist, or for the parties to agree on expectations up front, to minimize review cycles, close out reports more quickly, and reduce later requests for archived or unfindable results.

Including specific documentation expectations in contractual agreements allows the CDMO to structure its proposals appropriately and to collect data in the way that best supports the required reports. Of course, the CDMO may instead propose a format for the sponsor to edit. In either case, the CDMO’s internal reviewers can then use such “documentation agreements” as their own review criteria, allowing reports to be closed out as efficiently as possible.

Knowledge management of non-GMP documentation may not fall under the purview of current data integrity discussions. However, good documentation practices in R&D labs, combined with the translation of primary data into high-quality reports, will make the results and findings useful to all readers throughout the project and product life cycle and will ensure the legacy value of the knowledge database.